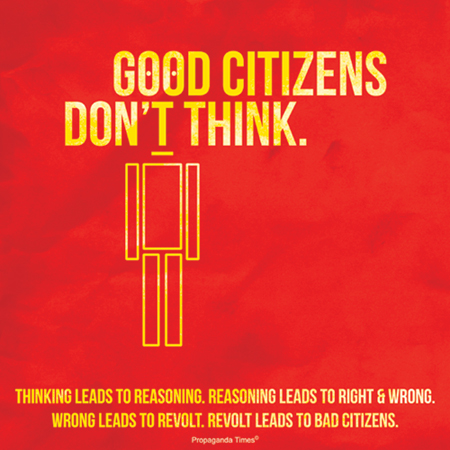

I'm tired of the malnutrition of discourse in web sites' comments sections. Pick a controversial topic [1] on a popular site, go to the comments section, and if you're like me, you'll start feeling some emotions: disbelief, disgust, and maybe a lack of faith in how we've (dis)organized our collective thinking ability. Comments by readers on climate change stories are perhaps the worst of the lot - name calling, propaganda, and, perhaps most fundamentally, a dearth of critical thinking skills. You simply see very, very little solid argumentation taking place.

Some examples are "The GOP’s climate change skepticism, in one groan-worthy video" and "Faulted for Avoiding ‘Islamic’ Labels to Describe Terrorism, White House Cites a Strategic Logic." Even my local paper has some doozies, e.g., "Tony Robinson shooting: Protestors hit Madison, Wisconsin streets (video)" (with gun control at least being a bona fide controversy).

I am just starting to look into the research around "hindrances due to basic human limitations" (check out Table 1 [2] from A Practical Guide To Critical Thinking), and I'm undecided whether it's possible to change minds through technical means (e.g., requiring comment authors to express some kind of well-structured, if simple, argument along with each claim). But it struck me that the thumbs up/down voting feature that's common to most of these sites exacerbates the problem. Let me take a naive look at this most meager form of interaction (clicking is literally the least you can do to interact) and maybe re-evaluate how useful popularity really is on the web.

I think at its most basic, the thumbs signal expresses one person's opinion of a human artifact (person, place, thing, or utterance, say), where likability, approval, and desire are common interpretations. As a web tool, "Thumbs Up/Down Style Ratings" says to use it when "A user wants to express a like/dislike (love/hate) type opinion about an object (person, place or thing) they are consuming / reading / experiencing," with the value being:

> these ratings, when assessed in aggregate, can quickly give a sense for the community's opinion of a rated object. They may also be helpful for drawing quick qualitative comparisons between like items (this is better than that) but this is of secondary importance with this ratings-type.

From the individual's perspective, I can see a few uses in online discussions:

- the satisfaction of expressing one's opinion,

- the ability to get attention from others,

- being able to see how others voted, which might lead to

- challenging, or more likely reinforcing, one's opinions and beliefs, and

- finding others possibly in your tribe (and perhaps more importantly, those not in it)

I suppose that from the site's perspective, the feature's value is engagement; people are excited by the above uses.

Regarding quality of discourse, what is the value of this feature? And how does it enhance or hinder critical thinking? To my mind, its contribution is all negative because opinion does not equate to fact. (However, I think I'm out of sync on this, given the current cultural and political climate where opinion is perhaps valued more highly than fact.) Let's simplify the question and phrase it in terms of popularity. What is the value of popularity in online discourse? My thinking is, none. A solid argument is not based on personal feeling; it is based on evidence, support, quality of sources, etc. This is literally (well, should be) grade school material.

It's a problem, and my question is, can we replace the thumbs signal interaction feature with a better one, something that ideally counteracts the echo chamber effect? A nascent thought I had was to keep the voting feature but apply it to portions of explicitly presented logical arguments instead of the individual unstructured utterances that currently make up comments. Briefly, this would require that comments link to an unbiased and well-structured argument (I list some sites below [3]), and it is there, rather than on the comments themselves, that people would vote. The difference is that they'd be voting on specific elements of the argument (such as a source's trustworthiness), and we might be able to interpret those votes as a self-disclosed bias, perhaps proudly proclaimed.
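To make the idea of voting on parts of an argument a little more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `StructuredArgument` class, the element names such as `source_trust`, and the +1/-1 vote values are hypothetical and do not come from any existing site or tool. It only shows that a per-element vote gives each reader a small "bias profile" rather than a single thumbs up or down.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredArgument:
    """A claim broken into named elements that readers vote on separately.

    Hypothetical structure for illustration only.
    """
    claim: str
    elements: dict[str, str]  # element id -> human-readable description
    # user -> {element id -> +1 (agree) or -1 (disagree)}
    votes: dict[str, dict[str, int]] = field(default_factory=dict)

    def vote(self, user: str, element_id: str, value: int) -> None:
        """Record one user's vote on one element of the argument."""
        if element_id not in self.elements:
            raise KeyError(f"unknown element: {element_id}")
        if value not in (1, -1):
            raise ValueError("value must be +1 or -1")
        self.votes.setdefault(user, {})[element_id] = value

    def profile(self, user: str) -> dict[str, int]:
        """Return a copy of the user's per-element votes (their disclosed bias)."""
        return dict(self.votes.get(user, {}))

    def tally(self, element_id: str) -> tuple[int, int]:
        """Return (agree count, disagree count) for a single element."""
        ups = sum(1 for p in self.votes.values() if p.get(element_id) == 1)
        downs = sum(1 for p in self.votes.values() if p.get(element_id) == -1)
        return ups, downs
```

The design choice worth noting is that the tally is kept per element, so a reader can agree with the evidence while distrusting the source, which a single thumbs signal cannot express.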

Without getting into more detail (I just wanted to get the thumbs up/down limitations written up here), I wonder if sharing and comparing biases instead of opinions might lead to tools to help bring a tiny fraction of people a tiny bit closer to understanding each other. Instead of "Hey John, check out the asshole liberal comments on this article," would it be useful to hear "Hey John, here's my opinion on this article's argument"?

Again, I realize I'm fighting human nature [2], but what if a computer program could analyze two opposing parties' beliefs and, for example, find an area of the argument they agree on? Or find a slightly diverse group of people whose beliefs are different but close (think of a belief search space) and somehow bring them together?

Naive? Probably, and this post is rough, but I'd love to hear your thoughts on any of it.

[1] I should put "controversial" in quotes for those topics like climate change that are no longer controversial from the scientific - i.e., reality-based - perspective. And I am quite looking forward to watching the Merchants of Doubt documentary (Rotten Tomatoes review and Amazon book links), by the way. Seen it yet?

[2] From A Practical Guide To Critical Thinking: Hindrances Due To Basic Human Limitations:

- Confirmation Bias & Selective Thinking

- False Memories & Confabulation

- Ignorance

- Perception Limitations

- Personal Biases & Prejudices

- Physical & Emotional Hindrances

- Testimonial Evidence

[3] Argument tools:

Debatewise - where great minds differ

Tuesday, August 11, 2015 at 1:37PM

After taking a break from computing in 2009 and creating a new kind of social platform - one based on treating life as an experiment - I happily returned to CS research in 2011. I was asked to re-join the AI group at UMass where I previously helped build Proximity, a platform for machine learning research. However, that position's funding has ended (as is the way of research's ebb and flow) and I'm excited to move on to the next adventure.